Analyze Reddit Communities with RAG to find pains and ICPs

Analyzing Reddit Content with an n8n Workflow

In this article I provide a step-by-step guide to building an advanced n8n workflow for extracting, analyzing, and categorizing Reddit content.

As a platform rich with user-generated content, Reddit is a valuable resource for marketers, researchers, and content creators. By leveraging n8n, an open-source automation tool, you can turn raw data into actionable insights.

Workflow Objectives in Short

The described workflow enables you to:

- Collect data from Reddit: Identify relevant posts using specific keywords and filters.

- Pre-process the data: Filter and categorize extracted data based on your topic of interest.

- Identify potential users: Define ideal customer profiles (ICPs) by analyzing the content context.

By using tools like the Reddit API and OpenAI models, this workflow allows detailed, targeted analysis, saving time and resources. You can always extend it by adding new workflows as sources (Twitter, LinkedIn, etc.), which gives the AI more information for identifying profiles.

Prerequisites

Before starting, ensure you have the following:

- n8n installed: You can run n8n locally or use a cloud instance. Refer to the official installation guide.

- Reddit API credentials: Register an app in your Reddit account to obtain the client_id and client_secret. You can go here (reddit url) to quickly create the app and get the credentials you need to start.

- OpenAI API key: Request it on OpenAI's website to access GPT models.

- Relevant keywords: Clearly define search terms to maximize results. For example, use single words (two at most); this demo is not designed to search Reddit for complex keyword phrases.
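If you want to check the Reddit credentials outside n8n before wiring them into the workflow, the sketch below builds (but does not send) an application-only OAuth token request. It assumes your app type supports the `client_credentials` grant; the function name `build_token_request` and the user-agent string are my own placeholders, not part of the workflow.

```python
import base64
import urllib.parse
import urllib.request

# Reddit's OAuth2 token endpoint.
TOKEN_URL = "https://www.reddit.com/api/v1/access_token"


def build_token_request(client_id: str, client_secret: str, user_agent: str) -> urllib.request.Request:
    """Build a token request (hypothetical helper; nothing is sent here)."""
    # client_id and client_secret travel as HTTP Basic auth.
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    return urllib.request.Request(
        TOKEN_URL,
        data=body,  # supplying a body makes this a POST
        headers={
            "Authorization": f"Basic {basic}",
            "User-Agent": user_agent,  # Reddit expects a descriptive user agent
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the actual call; inside n8n, the Reddit credential takes care of authentication for you.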

Workflow Setup

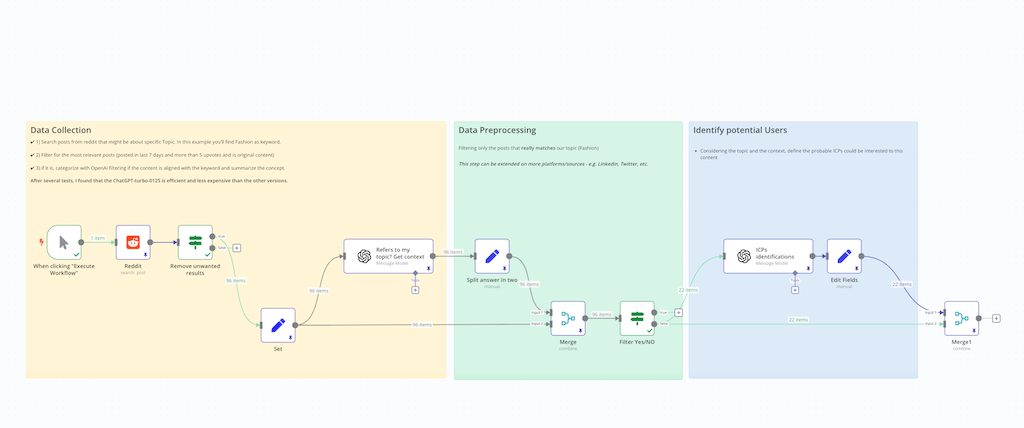

Below is a description of the 3 steps of this n8n workflow.

1. Data Collection

I pull posts from multiple subreddits by searching for a specific keyword. The results are then filtered so that the content matches the topic/keyword.

1.1 Workflow Trigger

Start the workflow using a trigger like "Webhook" or "Execute Workflow." This allows you to manually run the process to test and refine results.

1.2 Extracting Posts

- Configure the Reddit node:

- Search type: post.

- Keywords: Define specific terms (e.g., "Fashion").

- Filters: Posts from the last 7 days, with at least 5 upvotes, and containing original content.
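To make the node settings above concrete, here is a rough Python equivalent: one function builds a search URL, another applies the three filters to a post. It is a sketch under assumptions: I map "original content" to Reddit's `is_original_content` flag (you may prefer `is_self`), and the helper names are hypothetical.

```python
import time
import urllib.parse
from typing import Optional

# OAuth-authenticated search endpoint.
SEARCH_URL = "https://oauth.reddit.com/search"


def build_search_url(keyword: str, limit: int = 100) -> str:
    """Search posts ("link" type) from the past week, newest first."""
    params = {"q": keyword, "type": "link", "t": "week", "sort": "new", "limit": limit}
    return f"{SEARCH_URL}?{urllib.parse.urlencode(params)}"


def passes_filters(post: dict, now: Optional[float] = None,
                   min_score: int = 5, max_age_days: int = 7) -> bool:
    """Apply the age, upvote, and original-content filters to one post."""
    now = time.time() if now is None else now
    recent = now - post.get("created_utc", 0) <= max_age_days * 86400
    popular = post.get("score", 0) >= min_score
    # Assumption: "original content" means the OC flag is set on the post.
    original = bool(post.get("is_original_content", False))
    return recent and popular and original
```

The `now` parameter exists only so the age check can be tested with a fixed clock.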

1.3 Filtering Results

- Use a filter node to remove irrelevant posts.

- Conditions: The title or body of the post must include the keyword.

- This step reduces noise and enhances the efficiency of later stages.
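The filter condition can be sketched in a few lines of Python. This version is case-insensitive and matches whole words only, which is my own choice (so "fashion" does not match "fashionable"); `title` and `selftext` are the field names Reddit uses for a post's title and body.

```python
import re


def mentions_keyword(post: dict, keyword: str) -> bool:
    """Return True if the keyword appears in the post title or body."""
    # re.escape keeps keywords with special characters from breaking the regex.
    pattern = re.compile(rf"\b{re.escape(keyword)}\b", re.IGNORECASE)
    text = f"{post.get('title', '')}\n{post.get('selftext', '')}"
    return pattern.search(text) is not None
```

Drop the `\b` anchors if you would rather match substrings as well.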

1.4 Categorization with OpenAI

- Configure an OpenAI node:

- Prompt: Provide clear instructions to evaluate the relevance of content.

- Example prompt: "Analyze the following text to check its relevance to the topic 'Fashion.' Summarize the main content."
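For reference, this is roughly what the request body behind such a prompt looks like if you call the Chat Completions API yourself. It is a minimal sketch: the function name is hypothetical, the default model name is an assumption, and the code only builds the payload without sending it.

```python
def build_relevance_request(topic: str, post_text: str,
                            model: str = "gpt-3.5-turbo-0125") -> dict:
    """Build a Chat Completions payload for the relevance check."""
    prompt = (
        f"Analyze the following text to check its relevance to the topic '{topic}'. "
        "Summarize the main content.\n\n"
        f"Text:\n{post_text}"
    )
    return {
        "model": model,    # assumption: swap in whichever model you use
        "temperature": 0,  # low temperature for more repeatable answers
        "messages": [
            {"role": "system", "content": "You evaluate Reddit posts for topical relevance."},
            {"role": "user", "content": prompt},
        ],
    }
```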

2. Data Pre-Processing

Analyze and process the collected data. Here the OpenAI node is asked for a summary and the context in a single prompt.

2.1 Advanced Filtering

Refine the results with more specific criteria, such as semantic relevance.

2.2 Categorization

Use a manual node to classify the content into categories (e.g., "Trends," "Discussions," "Insights").

This step makes the data more accessible for subsequent analyses.
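If the labels come from free-form text, it helps to map them onto the fixed category set before continuing. The sketch below is one possible way to do that; the fallback to "Discussions" is my own assumption, not something the workflow prescribes.

```python
CATEGORIES = ("Trends", "Discussions", "Insights")


def normalize_category(raw_label: str, default: str = "Discussions") -> str:
    """Map a free-form label onto one of the fixed categories."""
    # Strip whitespace, quotes, and trailing periods, then compare case-insensitively.
    cleaned = raw_label.strip().strip(".\"'").casefold()
    for category in CATEGORIES:
        plural = category.casefold()
        # Accept both "trends" and "trend".
        if cleaned in (plural, plural.rstrip("s")):
            return category
    return default
```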

2.3 Data Consolidation

Combine the filtered data using the Merge node to create a cohesive dataset.
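Conceptually, this consolidation is a join on the post identifier. A minimal sketch, assuming both branches carry an `id` field and that you only want posts present in both (an inner join); the function name is hypothetical:

```python
def merge_by_id(posts: list, annotations: list, key: str = "id") -> list:
    """Combine each post with its annotation row, matching on `key`."""
    by_id = {row[key]: row for row in annotations}
    # Keep only posts that have a matching annotation; annotation fields win on conflict.
    return [{**post, **by_id[post[key]]} for post in posts if post[key] in by_id]
```

For example, merging `[{"id": "a", "title": "t"}]` with `[{"id": "a", "category": "Trends"}]` gives a single row containing `id`, `title`, and `category`.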

3. Identifying Potential Users

Generate ICPs based on the context and topics analyzed.

3.1 User Profiling

Use an OpenAI node to identify ideal customer profiles (ICPs). Analyze the context to segment users based on interests or demographics.
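Asking the model to answer in JSON makes the ICP output easier to handle downstream. The sketch below parses and validates such an answer; the field names (`segment`, `interests`, `pain_points`) are hypothetical and should be adapted to whatever your prompt requests.

```python
import json

# Hypothetical ICP schema; adapt it to the fields your prompt asks for.
ICP_FIELDS = ("segment", "interests", "pain_points")


def parse_icp(raw: str) -> dict:
    """Extract the JSON object from a model reply and check its fields."""
    # Models sometimes wrap JSON in extra text, so cut from the first "{" to the last "}".
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw[start:end + 1])
    missing = [field for field in ICP_FIELDS if field not in data]
    if missing:
        raise ValueError(f"missing ICP fields: {missing}")
    # Drop any extra keys the model added.
    return {field: data[field] for field in ICP_FIELDS}
```

Failing loudly on a missing field is deliberate: it is easier to retry one item than to discover gaps in the final dataset.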

3.2 Editing Fields

Manually refine the ICP fields to ensure the accuracy of the final dataset.

3.3 ICP Data Integration

Merge ICP data into the main dataset for easy consultation and strategic use.

4. Optimization and Tips

- Fine-Tune Filters: Experiment with keywords and upvote thresholds to improve relevance.

- Leverage Cost-Effective AI Models: gpt-3.5-turbo-0125 is an efficient and affordable option.

- Automate Data Collection: Set up a "Cron" trigger for regular executions.

- Expand to Other Sources: Include LinkedIn and Twitter for broader analysis.

- Integrate with Dashboards: Use visualization tools to monitor results.

Troubleshooting Common Issues

Pay attention to each individual service and its rate limits. Hitting them can be an unexpected hassle to manage and fix.

API Limits

- Problem: Exceeding Reddit API limits.

- Solution: Implement pagination and throttling in n8n.
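Outside n8n, pagination and throttling can be sketched as follows. Reddit listings return an `after` cursor that you pass to the next request. Here `fetch_page` is a hypothetical callable you supply (it takes the cursor and returns the parsed listing JSON), and the one-second delay is an arbitrary assumption rather than an official limit.

```python
import time
from typing import Callable, Iterator, Optional


def iter_posts(fetch_page: Callable[[Optional[str]], dict],
               max_pages: int = 5,
               delay_seconds: float = 1.0,
               sleep: Callable[[float], None] = time.sleep) -> Iterator[dict]:
    """Yield posts page by page, pausing between requests."""
    after = None
    for page_number in range(max_pages):
        if page_number:
            sleep(delay_seconds)  # throttle every request after the first
        listing = fetch_page(after)["data"]
        for child in listing["children"]:
            yield child["data"]
        after = listing.get("after")
        if not after:  # no cursor means this was the last page
            break
```

The `sleep` parameter is injectable so the loop can be exercised in tests without actually waiting.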

Inaccurate Categorization

- Problem: AI models produce inconsistent results.

- Solution: Refine prompts and include examples to improve output.
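One way to "include examples" is few-shot prompting: show the model a handful of labeled posts before the one you want classified. A minimal sketch, with a hypothetical function name and example labels of my own choosing:

```python
def build_few_shot_messages(topic: str, examples: list, post_text: str) -> list:
    """Build chat messages with (text, label) examples before the real post."""
    messages = [{
        "role": "system",
        "content": (
            f"Classify each post about '{topic}' as Trends, Discussions, or Insights. "
            "Answer with the category name only."
        ),
    }]
    for text, label in examples:
        # Each example is a user turn followed by the expected assistant answer.
        messages.append({"role": "user", "content": text})
        messages.append({"role": "assistant", "content": label})
    messages.append({"role": "user", "content": post_text})
    return messages
```

Two or three examples per category are usually a reasonable starting point; adjust based on how consistent the output becomes.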
